9 Essential Ways to Build an AI Model: A Practical Guide

Artificial intelligence (AI) is no longer a far-fetched concept from sci-fi movies; it’s a transformative technology that businesses and individuals use to innovate and solve complex problems. AI refers to systems and technologies designed to mimic aspects of human intelligence, such as language understanding, image recognition, and decision-making.

You might be wondering how to move beyond using AI tools to actually building your own AI model. It might seem daunting, but with a structured approach, creating a custom AI model is more accessible than ever, even for beginners. No-code and low-code platforms let users build AI models without extensive coding knowledge, and beginner-friendly tools such as Keras and Microsoft Azure AI are known for their simplicity.

This guide breaks down nine essential ways to build your own AI model, step by step. Before you begin, familiarize yourself with the key concepts that form the foundation of AI, including machine learning, the types of AI, and their practical applications; that grounding makes every later step easier. Building an AI model also opens new opportunities for data analysts and other professionals who want to apply AI to their own problems.

Introduction to AI Development

Artificial intelligence (AI) development is the process of building systems designed to perform tasks that would typically require human intelligence. These tasks can range from natural language processing—enabling machines to understand and generate human language—to image recognition, decision-making, and more. At its core, building an AI system involves several key steps: gathering and preparing data, selecting the right algorithms, training the AI model, and then testing and refining it for optimal performance.

AI models come in various forms, each suited to different types of problems. Supervised learning uses labeled data to teach models to make predictions or classifications, while unsupervised learning helps uncover hidden patterns in unlabeled data. Reinforcement learning, on the other hand, enables AI systems to learn through trial and error, much like how humans learn from experience. Whether you’re working on natural language applications, image recognition, or other AI projects, understanding these foundational concepts is essential for successful AI development and for creating models that can solve real-world challenges.

1. Clearly Define Your Problem and Desired Outcome

The very first step in any AI project is to pinpoint the exact problem you want to solve or the specific goal you aim to achieve. What challenge will your AI model address? What does success look like? Clearly defining your objectives and scope is crucial, as it lays the foundation for the entire project and ensures your efforts are focused. This initial phase involves understanding business requirements and translating them into a well-defined problem for your AI model.

Instead of getting bogged down in lengthy strategy cycles, consider focusing on high-impact AI opportunities that can deliver measurable results relatively quickly, as demonstrated in this real-world implementation case study where AI reduced customer support costs by 73% in 30 days. This often starts with an AI audit to map opportunities within days. The key is to identify pain points or inefficiencies where AI can provide significant value.

2. Gather and Prepare Your High-Quality Data

To build a successful AI model, you must first gather data as a foundational step. Data is the lifeblood of any AI model. You’ll need to collect high-quality, relevant data that aligns with your project’s goals. This stage involves sourcing data, which can come from various places like your company’s databases, public datasets, or third-party providers. Identifying and integrating multiple data sources is essential, and leveraging historical data can help train models to recognize patterns and predict future outcomes. It’s also important to consider different data types—such as structured and unstructured data—and to ensure that numerical data is properly managed for accurate modeling. Ensuring data diversity and representativeness is vital for building reliable AI models. The amount of data needed depends on the model complexity; simpler models may need thousands of samples while complex deep learning often requires millions.

Raw data is rarely ready for use. It often contains errors, missing values, inconsistencies, or biases, so data cleaning, transformation, and preprocessing are critical steps. Data preparation ensures your dataset is suitable for model training and evaluation. It includes handling missing data, removing outliers, standardizing formats, and labeling your data if you’re using supervised learning. The quality of your data directly impacts your model’s performance: garbage in, garbage out. Cleaning data improves model accuracy and training speed, and because each data point contributes to what the model learns, individual records deserve attention.

Volume alone is not the goal. Some approaches emphasize contextual cleaning and generating synthetic data for edge cases to improve model accuracy, rather than focusing on the sheer amount of data collected. Sample data can also be used for model validation or in generative models to improve output quality. Treat data refinement as an ongoing part of your AI development process. Once the data is collected and cleaned, the next step is to choose the algorithms that will process it; AutoML platforms can automate much of the training process, including feature selection and hyperparameter tuning.
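To make the cleaning steps concrete, here is a minimal sketch using only the Python standard library. It assumes a single numeric column where missing values are stored as `None`, fills them with the median, and drops outliers with the common 1.5 × IQR rule. The `clean_column` helper and the sample `ages` values are hypothetical, and real projects would typically use a data library and choose imputation and outlier rules per column.

```python
from statistics import median


def clean_column(values):
    """Fill missing values (None) with the median, then drop IQR outliers.

    Assumes at least two non-missing values are present.
    """
    present = sorted(v for v in values if v is not None)
    med = median(present)
    filled = [med if v is None else v for v in values]

    # Quartiles via the median-of-halves method (middle value excluded for odd n)
    half = len(present) // 2
    q1 = median(present[:half])
    q3 = median(present[-half:])
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr

    # Keep only values inside the accepted range
    return [v for v in filled if low <= v <= high]


# Hypothetical raw column: two missing entries and one implausible age
ages = [34, 29, None, 41, 38, 250, 31, None, 36]
cleaned = clean_column(ages)
print(cleaned)
```

Here the two missing entries become the median (36) and the value 250 is dropped, leaving eight records. Whether to drop, cap, or investigate an outlier is a judgment call that depends on the domain.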

3. Select the Right Tools, Frameworks, and Platforms

With your problem defined and data ready, the next step is choosing the appropriate tools and technologies. Python is the most commonly used programming language for AI development, thanks to its simplicity and its robust ecosystem of libraries and frameworks: TensorFlow, PyTorch, and Keras are popular for deep learning, and Scikit-learn is widely used for classical machine learning. R is less common for building AI systems, but it excels at statistical analysis, which makes it valuable for data science tasks. AI frameworks simplify building, training, and deploying models and offer automation capabilities. Traditional programming involves writing explicit code and logic, whereas AI development shifts toward data-driven approaches in which models learn from data rather than relying solely on manually coded rules.

Consider cloud platforms such as Google Cloud Vertex AI or AWS SageMaker, which offer integrated toolchains that can streamline development and deployment. For generative AI, for example, Google Cloud has documented numerous real-world applications built using its platform. Cloud platforms like AWS, Google Cloud, and Microsoft Azure offer pre-built AI services, enabling developers to leverage advanced capabilities without starting from scratch. The choice often depends on your team’s expertise, the complexity of your model, scalability requirements, and budget. Additionally, the required computing power is a crucial factor, as more advanced AI models and larger datasets demand greater computational resources for effective training and operation.

4. Choose Your Model: Pre-trained, Custom, or Fine-Tuned?

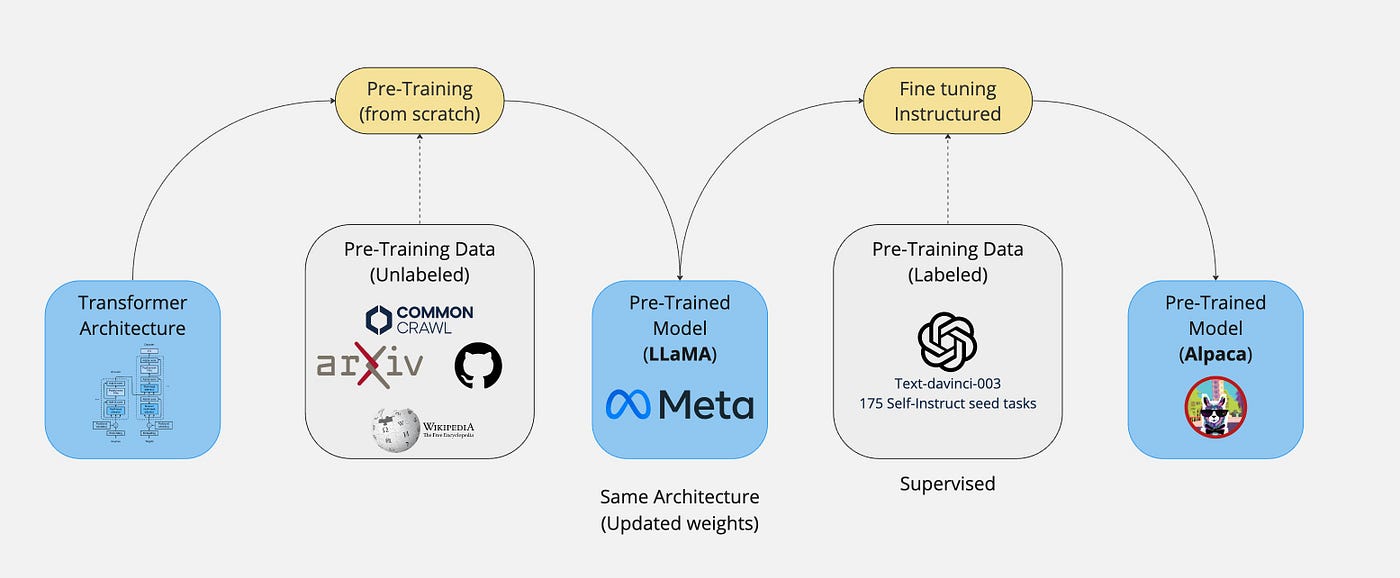

This is a crucial decision point: will you use a pre-trained model, build a custom model from scratch, or fine-tune an existing model? In some cases, AI solutions leverage multiple models using ensemble techniques to improve performance and versatility.

- Pre-trained models: These are models developed by others (like those available on Hugging Face) and trained on large datasets, often for general tasks like image recognition or natural language processing. They can be cost-effective and quick to implement, requiring fewer resources and technical expertise.

- Custom-built models: Developing a model from scratch provides the most control and allows for optimization tailored to your specific, potentially niche, business needs and proprietary data. Companies like Gofylo often advocate for custom model development services, particularly for domain-specific optimization, citing case studies where tailored AI solutions have led to significant cost reductions. This can lead to a competitive advantage and better performance for unique tasks. Building models often starts with foundation models as pre-trained starting points, which can then be adapted for specialized applications.

- Fine-tuning (Transfer Learning): This is a hybrid approach where you take a pre-trained model and further train it on your specific dataset. This can be a good balance, leveraging the power of a large pre-trained model while adapting it to your unique requirements. Techniques like Parameter-Efficient Fine-Tuning (PEFT) and Low-Rank Adaptation (LoRA) can reduce computational costs significantly while maintaining high accuracy. During fine-tuning, you can also adjust model parameters to better fit your data and objectives.

The best choice depends on factors like the uniqueness of your problem, data availability, required accuracy, development time, and budget. Regardless of the approach, tracking and managing model versions is essential for reproducibility and smooth deployment across different environments.
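To see why LoRA can cut fine-tuning costs, a rough back-of-the-envelope calculation helps. LoRA freezes a weight matrix of shape `d_out × d_in` and trains two small factors of shapes `d_out × rank` and `rank × d_in` instead. The layer size and rank below are hypothetical values chosen for illustration, not figures from any particular model.

```python
def full_params(d_in, d_out):
    """Trainable parameters when the whole weight matrix is fine-tuned."""
    return d_in * d_out


def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters in the two low-rank factors used by LoRA."""
    return rank * (d_in + d_out)


d_in = d_out = 4096  # hypothetical layer width
rank = 8             # hypothetical LoRA rank

full = full_params(d_in, d_out)
lora = lora_trainable_params(d_in, d_out, rank)
ratio = lora / full
print(f"full: {full:,}  LoRA: {lora:,}  share trained: {ratio:.2%}")
```

For this one layer, LoRA trains 65,536 parameters instead of 16,777,216, which is about 0.39% of the original. Actual savings depend on which layers are adapted and on the rank you choose.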

5. Train, Test, and Rigorously Evaluate Your Model

Once you have your data and have selected a model approach, it’s time to train models using a structured training process. This involves feeding your prepared data to the chosen algorithm, allowing it to learn patterns and relationships. The process involves splitting the dataset into training, validation, and test sets, and iteratively updating the model to improve learning.
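As a minimal sketch of the splitting step, the helper below shuffles a dataset and divides it into training, validation, and test sets. The 70/15/15 proportions and the fixed seed are assumptions for illustration; `split_dataset` is a hypothetical helper, and libraries such as Scikit-learn provide their own equivalents.

```python
import random


def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle a copy of rows and split it into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

With 100 rows this yields 70, 15, and 15 examples. The test set should stay untouched until the final evaluation so that it remains a fair measure of performance on unseen data.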

After training, rigorous testing and evaluation are paramount. You need to assess how well your model performs on unseen data against predefined success metrics. Cross-validation helps you estimate that performance reliably and detect overfitting or underfitting. In k-fold cross-validation, the data is divided into k folds, and each fold serves once as the test set while the model trains on the rest. Leave-one-out cross-validation is the extreme case: the model trains on all but one data point, is tested on that point, and the process repeats for every point. Common evaluation metrics depend on the type of AI task (e.g., precision, recall, F1-score for classification; Mean Absolute Error (MAE) for regression). It’s important that these metrics align with your business objectives. Predictive AI models are often used to forecast outcomes in areas such as finance, healthcare, and marketing, helping organizations make data-driven decisions. Some organizations implement real-world testing protocols, such as phased production exposure and A/B testing different approaches, to continuously refine models, focusing on business outcomes like cost reduction or customer satisfaction scores.
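The sketch below illustrates two of the ideas above in plain Python: generating k-fold index splits, and computing precision, recall, F1-score, and MAE from predictions. The labels and predictions are made-up sample values, and the function names are hypothetical; in practice you would likely rely on a library such as Scikit-learn for both.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs; each sample is tested exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary classification metrics for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


folds = list(k_fold_indices(10, 3))

# Made-up labels and predictions for illustration
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
mae = mean_absolute_error([3.0, 5.0, 2.5], [2.5, 5.0, 4.0])
print(precision, recall, f1, mae)
```

Calling `k_fold_indices(n, n)` gives leave-one-out cross-validation, since every fold then contains a single sample.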

Machine learning (ML) plays a central role in both the training and evaluation phases, enabling systems to learn from data and improve their performance over time.

6. Deploy and Integrate Your AI Model into Workflows

A trained and evaluated model provides no value until it’s deployed into a production environment where it can be used to make decisions or predictions. Deployment involves integrating the AI model into your existing systems or applications, with marketing-specific AI integration examples showing how platforms like HubSpot optimize content workflows. It is a critical step that requires careful consideration of infrastructure, computing resources, and integration with the systems around the model. Deployment targets include cloud servers, edge devices, or a hybrid setup. APIs provide easy access to deployed AI models, enabling developers to integrate AI capabilities into various applications efficiently.

Strategies like containerization (e.g., using Docker) and orchestration (e.g., with Kubernetes) are often used to ensure consistent and scalable deployment. Consider a gradual rollout, perhaps starting with a segment of your workflows, and ensure human oversight, especially in the early stages, to facilitate smooth adoption and address any unforeseen issues. Some rapid AI implementation services, like Gofylo, offer 30-day AI deployment frameworks that include containerized integration with existing systems. On-premises deployment of AI models gives more control but requires more setup. Edge deployment puts models on devices like phones or IoT sensors to reduce latency, making it ideal for applications requiring real-time responses.
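One common way to implement a gradual rollout is to assign each user to a stable bucket and send only a chosen percentage of buckets to the new model. The sketch below is an illustration under stated assumptions: the `in_rollout` and `route` helpers, the user ID format, and the 10% figure are all hypothetical, and a production system would usually use a feature-flag or traffic-splitting service instead.

```python
import hashlib


def in_rollout(user_id, percent):
    """Deterministically map user_id to a bucket 0-99 and compare it to percent."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def route(user_id, percent):
    """Choose which system should handle this user's request."""
    return "new_model" if in_rollout(user_id, percent) else "current_system"


# Hypothetical user IDs; roughly 10% should reach the new model
users = [f"user-{i}" for i in range(1000)]
share = sum(in_rollout(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users routed to the new model")
```

Because the bucket depends only on the user ID, each user gets a consistent experience, and raising the percentage only adds users to the new model without moving anyone back.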

7. Establish Iterative Improvement and Human Feedback Loops

AI model development is not a one-time task; it’s an iterative process. Models can degrade over time as data patterns change (a phenomenon known as “model drift”). Therefore, continuous monitoring of your model’s performance in production is crucial. After deployment, implementing AI automation best practices for continuous monitoring ensures models adapt to changing data patterns while maintaining operational efficiency. Security updates are vital for AI systems to protect against new threats, ensuring the integrity and reliability of your AI solutions over time.
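One simple way to watch for drift is to compare the distribution of a feature in live traffic against the distribution seen at training time. The sketch below uses the Population Stability Index (PSI), a widely used drift score. The bin count, the sample data, and the 0.25 alert threshold are assumptions here; the threshold is a commonly cited rule of thumb and should be tuned for your own data.

```python
import math


def psi(expected, actual, bins=5):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width) if width else 0
            # Clamp values outside the baseline range into the edge bins
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data for illustration only
baseline = [i % 50 for i in range(500)]            # feature values at training time
live_same = [i % 50 for i in range(200)]           # live data, same distribution
live_shifted = [30 + i % 20 for i in range(200)]   # live data, shifted upward

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff
for name, sample in [("same", live_same), ("shifted", live_shifted)]:
    score = psi(baseline, sample)
    print(name, round(score, 3), "ALERT" if score > ALERT_THRESHOLD else "ok")
```

A score near zero means the live data looks like the training data; a large score is a signal to investigate and possibly retrain.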

Implementing a Human-in-the-Loop (HITL) system is a powerful way to ensure ongoing improvement and accuracy. HITL means humans are actively involved in the AI system’s lifecycle, providing feedback, labeling uncertain predictions, or reviewing outputs, especially for complex or ambiguous cases. Data scientists play a key role in managing these feedback loops and retraining cycles, ensuring that the model is updated with high-quality, real-world data. This feedback is then used to retrain and refine the model periodically. This approach is core to methodologies like Gofylo’s, which incorporate human oversight and regular (e.g., monthly) model retraining cycles with fresh data to ensure continuous optimization and performance tracking.
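A common HITL pattern is to accept predictions the model is confident about and send the rest to a person for review. The sketch below shows that routing logic. The 0.8 threshold, the label names, and the `route_prediction` helper are hypothetical, and the reviewed items would feed whatever retraining process you use.

```python
def route_prediction(label, confidence, threshold=0.8):
    """Auto-accept confident predictions; send the rest to a human reviewer."""
    if confidence >= threshold:
        return {"label": label, "source": "model"}
    # Keep the model's guess as a suggestion for the reviewer
    return {"label": None, "source": "human_review", "suggested": label}


# Hypothetical (label, confidence) pairs from a support-ticket classifier
predictions = [("refund", 0.95), ("complaint", 0.55), ("refund", 0.81)]
routed = [route_prediction(label, conf) for label, conf in predictions]

review_queue = [r for r in routed if r["source"] == "human_review"]
print(f"{len(review_queue)} of {len(routed)} predictions need review")
```

Labels confirmed or corrected by reviewers can then be added to the training set for the next retraining cycle.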

8. Uphold Ethical AI Principles and Mitigate Bias

As AI becomes more powerful, the ethical implications of its use are increasingly important. It’s crucial to build and deploy AI models responsibly. This includes ensuring fairness, accountability, and transparency in your AI systems. Transparency in AI decision-making is crucial, especially in areas like healthcare and finance, where the stakes are high and decisions can have significant impacts on individuals and society. The potential for AI job displacement is a growing concern, making it essential to consider the societal impacts of AI adoption and to develop strategies that support workforce transitions.

AI models can inadvertently learn and even amplify biases present in the training data, leading to unfair or discriminatory outcomes. Actively work to identify and mitigate bias at every stage of the AI lifecycle. This can involve techniques like using diverse and representative datasets, implementing fairness-aware algorithms, conducting bias audits, and ensuring model interpretability through Explainable AI (XAI). Protecting privacy and data security is vital in AI development, ensuring that sensitive information is handled responsibly and securely. Establishing clear governance guidelines and involving diverse teams in the development process can also help.
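A basic bias audit can start by comparing how often the model produces a positive outcome for each group. The sketch below computes the demographic parity gap, one of several fairness metrics. The group labels and predictions are made-up sample data, and a single metric like this is a starting point for an audit rather than proof of fairness.

```python
from collections import defaultdict


def positive_rates(groups, predictions):
    """Share of positive (1) predictions for each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups, predictions):
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Made-up audit sample: group membership and model decisions (1 = approved)
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = positive_rates(groups, predictions)
gap = demographic_parity_gap(groups, predictions)
print(rates, gap)
```

In this sample, group "a" is approved 75% of the time and group "b" 25% of the time, a gap of 0.5 that would warrant a closer look at the data and the model.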

9. Explore Real-Time Adaptation for Dynamic Performance (Advanced)

An emerging trend in AI is the development of systems that can adapt in real-time without requiring full retraining cycles, as explored in this analysis of emerging AI adaptation techniques. These dynamic inference engines analyze live operational data streams and continuously adjust model behavior. This allows for micro-tuning of parameters almost instantaneously and can lead to improved contextual awareness and self-diagnosis of accuracy issues. While more advanced, this approach can offer significant benefits in fast-changing environments, such as real-time customer service adaptations or dynamic quality control in manufacturing.
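The text above does not specify a particular mechanism, so the following is only a generic illustration of the idea: an online learner that nudges its parameters after every new observation instead of waiting for a full retraining run. It is a one-feature linear model updated with stochastic gradient descent; the class, the learning rate, and the synthetic data stream are all assumptions for illustration.

```python
class OnlineLinearModel:
    """One-feature linear model updated one observation at a time with SGD."""

    def __init__(self, learning_rate=0.1):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        """Take one gradient step on the squared error for a single observation."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return error


def make_stream(slope, intercept, passes=200):
    """Synthetic live data: noise-free points on a line, repeated."""
    points = [(i / 10, slope * (i / 10) + intercept) for i in range(10)]
    return points * passes


model = OnlineLinearModel()
for x, y in make_stream(2.0, 1.0):
    model.update(x, y)
before = (model.w, model.b)

# The underlying pattern shifts; the same model keeps updating in place
for x, y in make_stream(3.0, 0.5):
    model.update(x, y)
after = (model.w, model.b)

print("before shift:", before)
print("after shift:", after)
```

The model first settles near a slope of 2 and an intercept of 1, then tracks the new pattern once the stream changes. Real systems add safeguards, such as bounded update sizes and monitoring, so that noisy or malicious inputs cannot pull the model off course.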

Advanced techniques such as deep learning leverage multiple layers of neural networks, inspired by the structure and function of the human brain, to learn complex patterns in data. These models excel at tasks like object detection and image classification, which are essential for applications such as self-driving cars and medical image analysis. They also power sentiment analysis in natural language processing and enable the creation of innovative AI apps across industries. By recognizing patterns in diverse datasets, these advanced AI systems deliver accurate predictions and real-time responses, driving dynamic adaptation and enhanced capabilities in real-world scenarios.

Building your own AI model is a journey that requires careful planning, robust data practices, iterative development, and a commitment to ethical principles. By following the nine steps above, you can navigate the complexities of AI development and create solutions that deliver real value. Whether you’re a startup looking for rapid AI-powered features or a larger company aiming for process automation, a methodical approach will set you up for success.

Best Practices for AI Development

Developing robust and effective AI models requires following a set of best practices that ensure your AI system delivers reliable results. One of the most critical factors is the quality of your training data—using high-quality data that is accurate, relevant, and representative of your target problem domain is essential for successful model training. Investing time in data collection, cleaning, and preparation will pay dividends in your AI model’s performance.

Equally important is selecting the right AI tools and frameworks. Leveraging established platforms like TensorFlow, PyTorch, or Scikit-learn can streamline the development process and provide access to advanced features for building, training, and deploying AI systems. Regularly evaluating your models and fine-tuning them based on new data or changing requirements helps maintain optimal performance. Finally, always consider the ethical implications of your AI systems, ensuring fairness and transparency throughout the development lifecycle. By adhering to these best practices, you set a strong foundation for AI success.

Common Challenges in AI Development

While the potential of AI is vast, developing effective AI models comes with its own set of challenges. One of the most common hurdles is ensuring the quality of your data. Incomplete, inconsistent, or biased data can undermine even the most sophisticated deep learning models, making thorough data cleaning and validation a top priority in any AI development project.

Model complexity is another challenge, especially when working with deep learning models that require significant computational resources and expertise to train and fine-tune. Balancing model accuracy with efficiency often means making trade-offs in architecture and resource allocation. Additionally, understanding how your AI models arrive at their decisions—known as model interpretability—can be difficult, particularly with complex neural networks. Employing the right tools and techniques, such as feature importance analysis or visualization methods, can help demystify your models and build trust in their predictions. Overcoming these challenges requires careful planning, the right tools, and a commitment to continuous learning and improvement.
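Permutation importance is one simple form of feature importance analysis: shuffle one feature's values and measure how much the model's error grows. The sketch below applies it to a toy model that depends on only one of its two inputs. The model, data, and helper names are hypothetical, and libraries such as Scikit-learn offer more complete implementations.

```python
import random


def mean_abs_error(model, rows, targets):
    """Average absolute error of model predictions over the rows."""
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, feature, seed=0):
    """Increase in mean absolute error after shuffling one feature column."""
    baseline = mean_abs_error(model, rows, targets)
    column = [r[feature] for r in rows]
    random.Random(seed).shuffle(column)
    # Rebuild each row with the shuffled value in place of the original
    permuted = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return mean_abs_error(model, permuted, targets) - baseline


def toy_model(row):
    """Stand-in for a trained model: uses feature 0 and ignores feature 1."""
    return 3.0 * row[0]


rows = [[float(i), float(i % 3)] for i in range(20)]
targets = [toy_model(r) for r in rows]

imp0 = permutation_importance(toy_model, rows, targets, feature=0)
imp1 = permutation_importance(toy_model, rows, targets, feature=1)
print("feature 0:", imp0, "feature 1:", imp1)
```

Shuffling the feature the model relies on increases the error, while shuffling the ignored feature changes nothing, which is the signal you would use to rank features in a real model.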

Future of AI Development

The future of AI development is rapidly evolving, driven by breakthroughs in natural language processing, computer vision, and reinforcement learning. The increasing availability of cloud computing resources, powerful graphics processing units, and specialized tensor processing units is making it easier and faster to train and deploy advanced AI systems. These technological advancements are enabling the creation of more sophisticated and scalable AI models than ever before.

A major trend shaping the future is the rise of foundation models—large, pre-trained models that can be fine-tuned for a wide range of specific tasks, dramatically reducing development time and resource requirements. As AI systems become more capable and accessible, it’s crucial to prioritize responsible development practices, ensuring that AI is used ethically and transparently. By staying informed about emerging technologies and best practices, organizations can harness the full potential of AI development to drive innovation and solve complex problems.

How We Build AI in 30 Days at Gofylo

At Gofylo, we don’t believe in 6-month strategy decks or waiting until everything is “perfect” before building. Our approach is implementation-first: we embed a fractional Chief AI Officer directly into your team and go from idea to working AI in just 30 days.

Here’s how we do it:

- Week 1 – Discover: We identify one high-impact use case, audit your data, and align on success metrics—fast.

- Week 2 – Build: We prototype the core AI feature through daily iteration cycles. You see progress every 24 hours.

- Week 3 – Deploy: The model goes live in your environment. We handle integration, infra, and team onboarding.

- Week 4 – Scale: We fine-tune, train your team, and set a roadmap for what’s next.

We’ve delivered dozens of AI projects this way, and we train your people as we go—so you’re never stuck depending on outside help. If you're ready to actually ship AI (not just talk about it), learn how we work at gofylo.io.